Capable of processing nearly 60 trillion particles, AbacusSummit is the largest suite of cosmological simulations ever produced.

The creators of AbacusSummit announced the simulation suite in a series of papers published in the Monthly Notices of the Royal Astronomical Society (MNRAS). Made up of more than 160 simulations, it models how particles behave in a box-shaped environment under gravitational attraction. These models are known as N-body simulations and are essential to modeling how dark matter interacts with baryonic (aka. “visible”) matter.

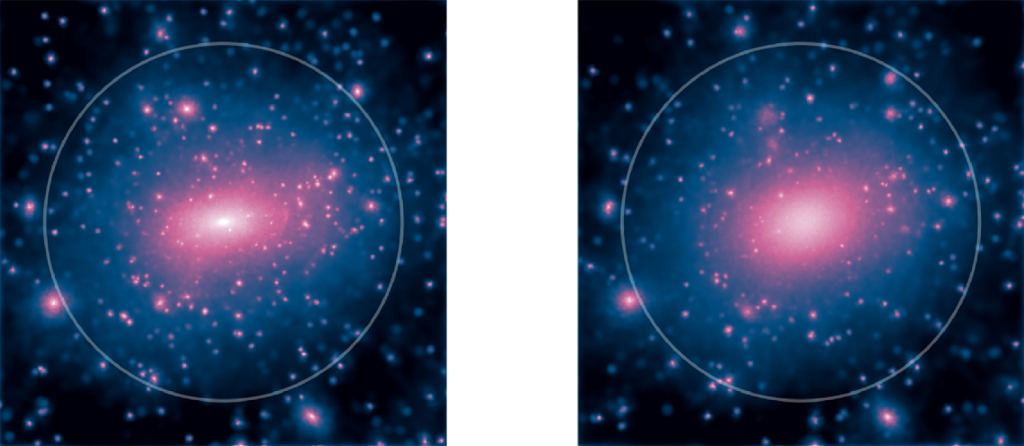

The simulated distribution of dark matter in galaxies. Credit: Brinckmann et al.

The development of the AbacusSummit simulation suite was led by Lehman Garrison (a CCA research fellow), along with Nina Maksimova and Daniel Eisenstein, respectively a graduate student and a professor of astronomy at the CfA. The simulations were run on the Summit supercomputer at the Oak Ridge Leadership Computing Facility (OLCF) in Tennessee, which is overseen by the U.S. Department of Energy (DoE).

N-body calculations, which involve computing the gravitational interactions of planets and other objects, are among the greatest challenges facing astrophysicists today. Part of what makes them so daunting is that each object interacts with every other object, no matter how far apart they are: the more objects under study, the more interactions must be accounted for.
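To make that scaling concrete, here is a minimal sketch of direct summation, the brute-force approach that computes every pairwise interaction. It assumes NumPy, units where G = 1, and a small softening length `eps` (a common trick to keep forces finite for very close pairs); none of these choices are taken from Abacus itself.

```python
import numpy as np

def direct_accelerations(pos, mass, G=1.0, eps=1e-3):
    """Acceleration on every particle by brute-force pairwise summation.

    pos:  (N, 3) array of positions
    mass: (N,) array of masses
    eps:  softening length (an assumption) that keeps very close pairs finite
    """
    # diff[i, j] = pos[j] - pos[i]: separation vector for every ordered pair
    diff = pos[np.newaxis, :, :] - pos[:, np.newaxis, :]
    dist2 = np.sum(diff**2, axis=-1) + eps**2
    inv_r3 = dist2 ** -1.5
    np.fill_diagonal(inv_r3, 0.0)  # a particle exerts no force on itself
    # a_i = G * sum_j m_j (r_j - r_i) / |r_j - r_i|^3
    return G * np.einsum("ij,ijk->ik", inv_r3 * mass[np.newaxis, :], diff)

def pair_count(n):
    """Number of distinct interacting pairs among n bodies: n(n - 1) / 2."""
    return n * (n - 1) // 2

# Two unit masses one unit apart pull on each other with (almost) unit acceleration
acc = direct_accelerations(np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]), np.array([1.0, 1.0]))
```

Doubling the number of bodies roughly quadruples the work (499,500 pairs for 1,000 bodies versus 1,999,000 for 2,000), which is why brute force becomes impractical long before the trillion-particle scale.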

To date, there is still no general closed-form solution for N-body problems involving three or more massive bodies, and the calculations available are only approximations. For example, the mathematics for calculating the interaction of three bodies, such as a binary star system and a planet (known as the “Three-Body Problem”), has yet to be solved. A common approach in cosmological simulations is to stop the clock, calculate the total force acting on each object, advance time by a small step, and repeat.
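The “stop the clock, compute forces, step forward, repeat” loop is commonly implemented as a kick-drift-kick leapfrog integrator. The Python sketch below shows that generic scheme; it is not taken from Abacus, and the `central_mass_accel` helper (a single fixed mass at the origin, in units where GM = 1) is a hypothetical stand-in for a real force calculation.

```python
import numpy as np

def leapfrog(pos, vel, accel, dt, n_steps):
    """Advance positions and velocities with kick-drift-kick leapfrog.

    accel(pos) must return the acceleration of every particle at `pos`.
    """
    pos = np.array(pos, dtype=float)
    vel = np.array(vel, dtype=float)
    a = accel(pos)
    for _ in range(n_steps):
        vel += 0.5 * dt * a  # half kick with the current forces
        pos += dt * vel      # drift: move every particle a full step
        a = accel(pos)       # "stop the clock" and recompute the total forces
        vel += 0.5 * dt * a  # second half kick with the new forces
    return pos, vel

def central_mass_accel(pos, GM=1.0):
    """Hypothetical helper: acceleration toward a fixed mass at the origin."""
    r = np.linalg.norm(pos, axis=-1, keepdims=True)
    return -GM * pos / r**3

# A test particle on a circular orbit of radius 1 (orbital speed 1 when GM = 1)
p, v = leapfrog([[1.0, 0.0, 0.0]], [[0.0, 1.0, 0.0]], central_mass_accel, dt=0.01, n_steps=628)
```

With dt = 0.01 and 628 steps, the particle completes roughly one orbit (t ≈ 2π) and ends up close to where it started. Leapfrog is popular for this kind of work because, with a fixed step, its energy error stays bounded over long runs instead of drifting.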

For their study (which was led by Maksimova), the team created their own codebase (called Abacus) to take advantage of Summit's parallel processing power, whereby many calculations can run simultaneously. They also relied on machine learning algorithms and a new mathematical approach, which allowed them to reach 70 million particle updates per node per second at early times and 45 million particle updates per node per second at late times.
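To put those throughput figures in perspective, here is a back-of-the-envelope estimate: if each node updates R particles per second, one full time step over N particles on n nodes takes about N / (R × n) seconds, assuming perfect parallel scaling. The particle and node counts below are hypothetical placeholders, not figures from the AbacusSummit papers.

```python
def seconds_per_step(n_particles, updates_per_node_per_s, n_nodes):
    """Wall-clock time for one step, assuming perfect parallel scaling (an idealization)."""
    return n_particles / (updates_per_node_per_s * n_nodes)

EARLY_RATE = 70e6  # particle updates per node per second at early times (from the article)
LATE_RATE = 45e6   # particle updates per node per second at late times (from the article)

# Hypothetical example: 1 trillion particles spread over 1,000 nodes
early = seconds_per_step(1e12, EARLY_RATE, 1000)
late = seconds_per_step(1e12, LATE_RATE, 1000)
```

Under these made-up inputs, a step takes about 14.3 seconds at early times and about 22.2 seconds at late times.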

A snapshot of one of the AbacusSummit simulations, shown at various zoom scales: 10 billion light-years across, 1.2 billion light-years across, and 100 million light-years across. Credit: The AbacusSummit Team/layout by Lucy Reading-Ikkanda/Simons Foundation

As Garrison explained in a recent CCA press release:

“This suite is so big that it probably has more particles than all the other N-body simulations that have ever been run combined, though that's a hard statement to be certain of. The galaxy surveys are delivering tremendously detailed maps of the universe, and we need similarly ambitious simulations that cover a wide range of possible universes that we might live in.

“AbacusSummit is the first suite of such simulations that has the breadth and fidelity to compare to these remarkable observations … Our vision was to create this code to deliver the simulations that are needed for this particular new brand of galaxy survey. We wrote the code to do the simulations much faster and much more accurately than ever before.”

In addition to the usual challenges, running full N-body simulations requires carefully designed algorithms because of the sheer amount of memory involved. This meant that Abacus could not make copies of the simulation for different supercomputer nodes to work on; instead, it divides each simulation into a grid. This involves making approximate calculations for distant particles, which play a smaller role than nearby ones.

It then splits the nearby particles into multiple cells so the computer can work on each one separately, then combines the results from each with the approximation for distant particles. The research team found that this approach (uniform divisions) makes better use of parallel processing and allows much of the distant-particle approximation to be computed before the simulation begins.
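The near/far split described above can be illustrated with a toy version in Python. Particles are binned into a uniform grid of cells. Forces from particles in the same or adjacent cells are summed exactly, while each more distant cell is replaced by a single point at its center of mass (a monopole approximation). This is only a sketch of the general idea under those assumptions: it ignores the periodic box, and production codes typically use much more accurate higher-order expansions for the far field. The function name `grid_accelerations` is hypothetical.

```python
import numpy as np

def grid_accelerations(pos, mass, box, n_cells, G=1.0, eps=1e-3):
    """Toy near/far force split on a uniform, non-periodic grid (illustrative only).

    Particles in the same or an adjacent cell interact directly (near field);
    every more distant cell acts as a single point mass at its center of mass
    (far field, monopole approximation).
    """
    # Uniform division: integer cell coordinates for every particle
    cell = np.clip((pos / box * n_cells).astype(int), 0, n_cells - 1)
    keys = [tuple(c) for c in cell]
    members = {}
    for i, k in enumerate(keys):
        members.setdefault(k, []).append(i)

    # Far-field summary per occupied cell; it depends only on the cell's own
    # particles, so it is computed once and reused for every target particle
    summary = {}
    for k, idx in members.items():
        m = mass[idx].sum()
        summary[k] = (m, (mass[idx][:, None] * pos[idx]).sum(axis=0) / m)

    acc = np.zeros_like(pos, dtype=float)
    for i, home in enumerate(keys):
        for k, idx in members.items():
            if max(abs(a - b) for a, b in zip(k, home)) <= 1:
                # Near field: exact pairwise sum over the cell's particles
                d = pos[idx] - pos[i]
                w = mass[idx] / (np.sum(d**2, axis=1) + eps**2) ** 1.5
                w[np.array(idx) == i] = 0.0  # skip self-interaction
                acc[i] += G * (w[:, None] * d).sum(axis=0)
            else:
                # Far field: the whole cell collapsed into one point mass
                m, com = summary[k]
                d = com - pos[i]
                acc[i] += G * m * d / (np.dot(d, d) + eps**2) ** 1.5
    return acc
```

Because every cell has the same shape and size, the work per cell is predictable, which is the property that makes uniform divisions attractive for parallel hardware. With only two cells per side every cell is a neighbor of every other, so the result reduces to the exact direct sum.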

Abacus's parallel computer processing, visualized. Credit: Lucy Reading-Ikkanda/Simons Foundation

This is a significant improvement over other N-body codes, which divide simulations irregularly based on the distribution of particles. Thanks to its design, Abacus can update 70 million particles per node per second (where each particle represents a clump of Dark Matter with three billion solar masses). It can also analyze the simulation as it runs and search for patches of Dark Matter that indicate the presence of bright star-forming galaxies.
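Searching particle data for dense patches of dark matter (“halos”) is usually done with a group finder. A textbook example is friends-of-friends: any two particles closer than a chosen linking length belong to the same group, and groups chain together through shared members. The sketch below uses that generic algorithm, swapped in for illustration, together with the article's figure of three billion solar masses per particle to turn group sizes into halo masses. The linking length, the minimum group size, and the function names are assumptions; Abacus's own on-the-fly halo finder is not described here and is not necessarily this simple.

```python
import numpy as np

PARTICLE_MASS_MSUN = 3e9  # mass per simulation particle, as quoted in the article

def friends_of_friends(pos, linking_length):
    """Label particles chained together by separations below linking_length.

    Brute-force O(N^2) pair search plus union-find; suitable for toy inputs only.
    Returns one group label per particle.
    """
    n = len(pos)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps the trees shallow
            i = parent[i]
        return i

    dist2 = np.sum((pos[:, None, :] - pos[None, :, :]) ** 2, axis=-1)
    ii, jj = np.nonzero(np.triu(dist2 < linking_length**2, k=1))
    for i, j in zip(ii, jj):
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[rj] = ri  # merge the two groups
    return np.array([find(i) for i in range(n)])

def halo_masses(labels, min_members=2):
    """Masses (solar masses) of groups with at least min_members particles, largest first."""
    _, counts = np.unique(labels, return_counts=True)
    return np.sort(counts[counts >= min_members] * PARTICLE_MASS_MSUN)[::-1]

# Hypothetical data: a 5-particle chain, a 3-particle clump, and one isolated particle
chain = [[0.1 * k, 0.0, 0.0] for k in range(5)]
clump = [[10.0, 10.0, 10.0 + 0.1 * k] for k in range(3)]
labels = friends_of_friends(np.array(chain + clump + [[5.0, 5.0, 5.0]]), linking_length=0.15)
```

The chain shows the “friends of friends” part: its end particles are 0.4 apart, well beyond the linking length, yet they land in the same group because each link in between is short enough.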

These and other cosmological objects will be the subject of future surveys that map the cosmos in unprecedented detail. These include the Dark Energy Spectroscopic Instrument (DESI), the Nancy Grace Roman Space Telescope (RST), and ESA's Euclid spacecraft. One of the goals of these big-budget missions is to improve measurements of the cosmological and astrophysical parameters that determine how the universe behaves and how it looks.

This, in turn, will allow more detailed simulations that use updated values for various parameters, such as those describing Dark Energy. Daniel J. Eisenstein, a researcher with the CfA and a co-author on the paper, is also a member of the DESI collaboration. He and others like him are looking forward to what Abacus can do for these cosmological surveys in the coming years.

“Cosmology is leaping forward because of the multidisciplinary fusion of amazing observations and advanced computing,” he said. “The coming decade promises to be a magnificent age in our study of the historical sweep of the universe.”

Additional Reading: Simons Foundation, MNRAS




Today, the biggest mysteries facing cosmologists and astronomers are the roles that gravitational attraction and cosmic expansion play in the evolution of the universe. To solve these mysteries, cosmologists and astronomers are taking a two-pronged approach: directly observing the cosmos to see these forces at work, while attempting to find theoretical explanations for the observed behavior, such as Dark Matter and Dark Energy.

In between these two approaches, researchers model cosmic evolution with computer simulations to see whether observations align with theoretical predictions. The latest of these is AbacusSummit, a simulation suite developed by the Flatiron Institute's Center for Computational Astrophysics (CCA) and the Harvard-Smithsonian Center for Astrophysics (CfA).