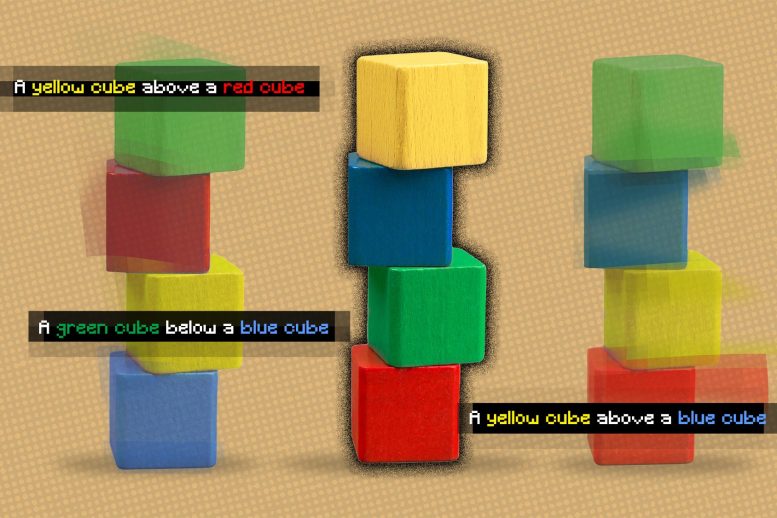

MIT researchers have developed an artificial intelligence model that understands the underlying relationships between objects in a scene and can generate accurate images of scenes from text descriptions. Credit: Jose-Luis Olivares, MIT, and iStockphoto

A new machine-learning model could enable robots to understand interactions in the world the way humans do.

When people look at a scene, they see objects and the relationships between them. On top of your desk, there might be a laptop that is sitting to the left of a phone, which is in front of a computer monitor.

Many deep learning models struggle to see the world this way because they don't understand the entangled relationships between individual objects. Without knowledge of these relationships, a robot designed to help someone in a kitchen would have difficulty following a command like “pick up the spatula that is to the left of the stove and place it on top of the cutting board.”

In an effort to solve this problem, MIT researchers have developed a model that understands the underlying relationships between objects in a scene. Their model represents individual relationships one at a time, then combines these representations to describe the overall scene. This enables the model to generate more accurate images from text descriptions, even when the scene includes several objects that are arranged in different relationships with one another.

This work could be applied in situations where industrial robots must perform intricate, multistep manipulation tasks, like stacking items in a warehouse or assembling appliances. It also moves the field one step closer to enabling machines that can learn from and interact with their environments more like humans do.

The framework the researchers developed can generate an image of a scene based on a text description of objects and their relationships. In this figure, the researchers' final image is on the right and correctly follows the text description. Credit: Courtesy of the researchers

“When I look at a table, I can't say that there is an object at XYZ location. Our minds don't work like that. In our minds, when we understand a scene, we really understand it based on the relationships between the objects. We think that by building a system that can understand the relationships between objects, we could use that system to more effectively manipulate and change our environments,” says Yilun Du, a PhD student in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-lead author of the paper.

Du wrote the paper with co-lead authors Shuang Li, a CSAIL PhD student, and Nan Liu, a graduate student at the University of Illinois at Urbana-Champaign; as well as Joshua B. Tenenbaum, the Paul E. Newton Career Development Professor of Cognitive Science and Computation in the Department of Brain and Cognitive Sciences and a member of CSAIL; and senior author Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Computer Science and a member of CSAIL. The research will be presented at the Conference on Neural Information Processing Systems in December.

One relationship at a time

The framework the researchers developed can generate an image of a scene based on a text description of objects and their relationships, like “A wood table to the left of a blue stool. A red couch to the right of a blue stool.”

Their system would break these sentences down into two smaller pieces that describe each individual relationship (“a wood table to the left of a blue stool” and “a red couch to the right of a blue stool”), and then model each part separately. Those pieces are then combined through an optimization process that generates an image of the scene.
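
To make the decomposition step concrete, here is a minimal Python sketch that splits the example description into one clause per relationship. It is only an illustration: the function names `split_relations` and `parse_relation` and the regular expression are assumptions made for this example, and the article does not say how the researchers' system actually parses text.

```python
import re

# Hypothetical pattern for clauses shaped like
# "a <subject> to the left/right of a <object>". This is an assumption for
# illustration, not the parser used by the researchers.
CLAUSE = re.compile(
    r"(?:a|an|the)\s+(.+?)\s+(to the (?:left|right) of)\s+(?:a|an|the)\s+(.+)"
)

def split_relations(description):
    """Split a scene description into one lowercase clause per sentence."""
    return [part.strip().lower() for part in description.split(".") if part.strip()]

def parse_relation(clause):
    """Return (subject, relation, object) for a clause, or None if it does not match."""
    match = CLAUSE.fullmatch(clause)
    return match.groups() if match else None

description = "A wood table to the left of a blue stool. A red couch to the right of a blue stool."
clauses = split_relations(description)
triples = [parse_relation(clause) for clause in clauses]

for triple in triples:
    print(triple)
```

Each resulting triple names a single relationship, which is the unit the article says is modeled separately before the pieces are combined.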

In this figure, the researchers' final images are labeled “ours.” Credit: Courtesy of the researchers

The researchers used a machine-learning technique called energy-based models to represent the individual object relationships in a scene description. This technique enables them to use one energy-based model to encode each relational description, and then compose them together in a way that infers all objects and relationships.
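
The idea of composing one energy function per relationship can be sketched with a toy example. The Python code below is not the researchers' model: their energy functions are learned and operate on images, whereas this sketch assumes a "scene" is just a set of 1-D x-coordinates and uses a hand-written energy for "left of". The names `MARGIN`, `left_of_energy`, `compose_energy`, and `generate_scene` are all hypothetical. What it does show is the general pattern: each relation contributes its own energy, the energies are summed, and a scene is produced by gradient descent on the sum.

```python
# Toy illustration only: scenes are 1-D x-coordinates, not images.
MARGIN = 1.0  # assumed minimum gap for "left of" to count as satisfied

def left_of_energy(scene, a, b):
    """Zero when a is at least MARGIN to the left of b, quadratic otherwise."""
    violation = max(0.0, scene[a] - scene[b] + MARGIN)
    return violation ** 2

def left_of_grad(scene, a, b):
    """Gradient of left_of_energy with respect to the two x-coordinates."""
    violation = max(0.0, scene[a] - scene[b] + MARGIN)
    return {a: 2.0 * violation, b: -2.0 * violation}

def compose_energy(scene, relations):
    """Composition: the scene energy is the sum of the per-relation energies."""
    return sum(left_of_energy(scene, a, b) for a, b in relations)

def generate_scene(objects, relations, steps=200, lr=0.1):
    """Gradient descent on the summed energy, starting with all objects at x = 0."""
    scene = {name: 0.0 for name in objects}
    for _ in range(steps):
        total_grad = {name: 0.0 for name in objects}
        for a, b in relations:
            for name, g in left_of_grad(scene, a, b).items():
                total_grad[name] += g
        for name in objects:
            scene[name] -= lr * total_grad[name]
    return scene

# "Table left of stool" and "couch right of stool" (written as stool left of couch).
relations = [("table", "stool"), ("stool", "couch")]
scene = generate_scene(["table", "stool", "couch"], relations)
print(scene, compose_energy(scene, relations))
```

Because the relations only interact through the sum, adding a third or fourth relation means appending another pair to `relations`; nothing else in the sketch changes.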

By breaking the sentences down into shorter pieces for each relationship, the system can recombine them in a variety of ways, so it is better able to adapt to scene descriptions it hasn't seen before, Li explains.

“Other systems would take all the relations holistically and generate the image one-shot from the description. Such approaches fail when we have out-of-distribution descriptions, such as descriptions with more relations, since these models can't really adapt one shot to generate images containing more relationships. However, as we are composing these separate, smaller models together, we can model a larger number of relationships and adapt to novel combinations,” Du says.

The system also works in reverse: given an image, it can find text descriptions that match the relationships between objects in the scene. In addition, their model can be used to edit an image by rearranging the objects in the scene so they match a new description.

Understanding complex scenes

The researchers compared their model to other deep learning methods that were given text descriptions and tasked with generating images that displayed the corresponding objects and their relationships. In each instance, their model outperformed the baselines.

They also asked humans to evaluate whether the generated images matched the original scene description. In the most complex examples, where descriptions contained three relationships, 91 percent of participants concluded that the new model performed better.

“One interesting thing we found is that for our model, we can increase our sentence from having one relation description to having two, or three, or even four descriptions, and our approach continues to be able to generate images that are correctly described by those descriptions, while other methods fail,” Du says.

The researchers also showed the model images of scenes it hadn't seen before, as well as several different text descriptions of each image, and it was able to successfully identify the description that best matched the object relationships in the image.
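
One plausible way to picture this matching step, offered as an assumption rather than a description of the researchers' procedure, is to score every candidate description by its total energy for the given scene and keep the lowest. The Python sketch below uses hand-written energies over 1-D x-coordinates in place of learned energies over images, and the names `MARGIN`, `left_of_energy`, `description_energy`, and `best_description` are hypothetical.

```python
# Toy illustration only: the "image" is a dict of 1-D x-coordinates.
MARGIN = 1.0  # assumed minimum gap for "left of" to count as satisfied

def left_of_energy(scene, a, b):
    """Zero when a is at least MARGIN to the left of b, quadratic otherwise."""
    return max(0.0, scene[a] - scene[b] + MARGIN) ** 2

def description_energy(scene, relations):
    """Total energy of a description: the sum over its individual relations."""
    return sum(left_of_energy(scene, a, b) for a, b in relations)

def best_description(scene, candidates):
    """Return the candidate text whose relations have the lowest total energy."""
    return min(candidates, key=lambda text: description_energy(scene, candidates[text]))

scene = {"table": -2.0, "stool": 0.0, "couch": 2.0}

# Each candidate maps a text description to its list of (left, right) pairs.
candidates = {
    "table left of stool, couch right of stool": [("table", "stool"), ("stool", "couch")],
    "couch left of stool, table right of stool": [("couch", "stool"), ("stool", "table")],
    "stool left of table, couch right of stool": [("stool", "table"), ("stool", "couch")],
}

print(best_description(scene, candidates))
```

In this toy setup a description that matches the scene has zero energy, and each violated relation adds a positive penalty, so partially correct descriptions rank between fully correct and fully wrong ones.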

And when the researchers gave the system two relational scene descriptions that described the same image but in different ways, the model was able to understand that the descriptions were equivalent.

The researchers were impressed by the robustness of their model, especially when working with descriptions it hadn't encountered before.

“This is very promising because that is closer to how humans work. Humans may only see several examples, but we can extract useful information from just those few examples and combine them together to create infinite combinations. And our model has such a property that allows it to learn from fewer data but generalize to more complex scenes or image generations,” Li says.

While these early results are encouraging, the researchers would like to see how their model performs on real-world images that are more complex, with noisy backgrounds and objects that are blocking one another.

They are also interested in eventually incorporating their model into robotics systems, enabling a robot to infer object relationships from videos and then apply this knowledge to manipulate objects in the world.

“Developing visual representations that can deal with the compositional nature of the world around us is one of the key open problems in computer vision. This paper makes significant progress on this problem by proposing an energy-based model that explicitly models multiple relations among the objects depicted in the image. The results are really impressive,” says Josef Sivic, a prominent scientist at the Czech Institute of Informatics, Robotics, and Cybernetics at Czech Technical University, who was not involved with this research.

Reference: “Learning to Compose Visual Relations” by Nan Liu, Shuang Li, Yilun Du, Joshua B. Tenenbaum and Antonio Torralba, NeurIPS 2021 (Spotlight). GitHub.

This research is supported, in part, by Raytheon BBN Technologies Corp., Mitsubishi Electric Research Laboratory, the National Science Foundation, the Office of Naval Research, and the IBM Thomas J. Watson Research Center.