In a neural network trained to recognize animals in images, for example, the MIT researchers' method might describe a certain neuron as detecting the ears of foxes. Their scalable technique is able to generate more accurate and specific descriptions of individual neurons than other methods.

In a new paper, the team shows that this method can be used to audit a neural network to determine what it has learned, or even to edit a network by identifying and then switching off unhelpful or incorrect neurons.

“We wanted to create a method where a machine-learning practitioner can give this system their model and it will tell them everything it knows about that model, from the perspective of the model's neurons, in language. This helps you answer the basic question, ‘Is there something my model knows about that I would not have expected it to know?’” says Evan Hernandez, a graduate student in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the paper.

Co-authors include Sarah Schwettmann, a postdoc in CSAIL; David Bau, a recent CSAIL graduate who is an incoming assistant professor of computer science at Northeastern University; Teona Bagashvili, a former visiting student in CSAIL; Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Computer Science and a member of CSAIL; and senior author Jacob Andreas, the X Consortium Assistant Professor in CSAIL. The research will be presented at the International Conference on Learning Representations.

Automatically generated descriptions

Most existing techniques that help machine-learning practitioners understand how a model works either describe the entire neural network or require researchers to identify concepts they think individual neurons could be focusing on.

The system Hernandez and his collaborators developed, called MILAN (mutual-information-guided linguistic annotation of neurons), improves upon these methods because it does not require a list of concepts in advance and can automatically generate natural language descriptions of all the neurons in a network. This is especially important because one neural network can contain hundreds of thousands of individual neurons.

MILAN produces descriptions of neurons in neural networks trained for computer vision tasks like object recognition and image synthesis. To describe a given neuron, the system first inspects that neuron's behavior on thousands of images to find the set of image regions in which the neuron is most active.
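The first step, finding the image regions where a neuron is most active, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not MILAN's implementation: each image's activation map for one neuron is given as a small grid of numbers, images are ranked by their peak activation, and a cell counts as part of the neuron's region wherever its activation reaches one global threshold taken at a high quantile of all activations. The function name `top_activating_regions`, its `k` and `quantile` parameters, and the toy numbers are hypothetical.

```python
from typing import List, Tuple

# An activation map: grid[r][c] is one neuron's activation at spatial cell (r, c).
Grid = List[List[float]]


def top_activating_regions(
    activations: List[Grid], k: int, quantile: float = 0.99
) -> List[Tuple[int, List[Tuple[int, int]]]]:
    """Return up to k (image index, active cells) pairs for a single neuron.

    Images are ranked by their peak activation. Within each kept image, a cell
    counts as part of the neuron's region if its activation reaches a global
    threshold taken at the given quantile of all activations (an assumption).
    """
    flat = sorted(a for grid in activations for row in grid for a in row)
    threshold = flat[min(int(quantile * len(flat)), len(flat) - 1)]

    # Rank images by the strongest response anywhere in the image.
    peaks = [max(max(row) for row in grid) for grid in activations]
    ranked = sorted(range(len(activations)), key=lambda i: peaks[i], reverse=True)

    results = []
    for i in ranked[:k]:
        cells = [
            (r, c)
            for r, row in enumerate(activations[i])
            for c, a in enumerate(row)
            if a >= threshold
        ]
        results.append((i, cells))
    return results


# Toy activation maps for three 2x2 "images" (made-up numbers).
toy = [
    [[0.1, 0.2], [0.0, 0.3]],
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.4, 0.5], [0.6, 0.2]],
]
print(top_activating_regions(toy, k=2, quantile=0.8))
# -> [(1, [(0, 0), (1, 1)]), (2, [(1, 0)])]
```

A single global threshold keeps the regions comparable across images; a per-image threshold would be an equally plausible choice in a sketch like this.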

“In a neural network that is trained to classify images, there are going to be lots of different neurons that detect dogs. Even though ‘dog’ might be an accurate description of a lot of these neurons, it is not very informative,” says Hernandez.
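The observation that "dog" is accurate but uninformative suggests judging a candidate description by how specific it is, not only by how well it fits. One way to express that, and the idea the "mutual-information-guided" in MILAN's name points to, is to score each candidate description d by its pointwise mutual information with the neuron's top regions E: log p(d | E) - λ log p(d). The sketch below assumes both probability tables are already available (in a real system they would come from trained models); the function name `pick_description`, the weight `lam`, and every number are illustrative assumptions.

```python
import math
from typing import Dict


def pick_description(
    p_given_regions: Dict[str, float],
    p_prior: Dict[str, float],
    lam: float = 1.0,
) -> str:
    """Return the candidate d that maximizes log p(d | E) - lam * log p(d).

    p_given_regions: probability of each candidate description given the
        neuron's exemplar regions E (hypothetically, from an image captioner).
    p_prior: how likely each description is on its own (hypothetically,
        from a language model). Generic phrases like "dog" have a high prior.
    lam: weight on the prior penalty; lam = 1 is plain pointwise mutual information.
    """
    def score(d: str) -> float:
        return math.log(p_given_regions[d]) - lam * math.log(p_prior[d])

    return max(p_given_regions, key=score)


# Made-up probabilities for one dog-detecting neuron.
p_given = {"dog": 0.5, "animal": 0.2, "pointed dog ears": 0.3}
prior = {"dog": 0.2, "animal": 0.3, "pointed dog ears": 0.01}

print(pick_description(p_given, prior, lam=0.0))  # best caption alone: dog
print(pick_description(p_given, prior, lam=1.0))  # PMI prefers: pointed dog ears
```

With `lam=0.0` the score is just the caption likelihood, so the generic "dog" wins; penalizing the prior lets a rarer, more specific phrase win instead.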

The team compared MILAN to other models and found that it generated richer and more accurate descriptions, but the researchers were more interested in seeing how it could help answer specific questions about computer vision models.

Analyzing, auditing, and editing neural networks

First, they used MILAN to analyze which neurons are most important in a neural network. They generated descriptions for every neuron and sorted them based on the words in the descriptions. They then gradually removed neurons from the network to see how its accuracy changed, and found that neurons with two very different words in their descriptions (vases and fossils, for instance) were less important to the network.
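The ablation experiment can be sketched as follows, under loudly stated assumptions: "very different words" is measured here as the largest cosine distance between hypothetical 2-D word vectors, the "network" is a made-up two-neuron linear classifier, and removing a neuron means zeroing its contribution. The names `WORD_VECS`, `max_word_distance`, and `ablation_curve`, and all numbers, are illustrative; the toy curve is constructed to mirror the finding, not to reproduce it.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Set, Tuple

# Hypothetical 2-D word vectors; a real analysis would use pretrained embeddings.
WORD_VECS: Dict[str, Tuple[float, float]] = {
    "fossils": (1.0, 0.0),
    "vases": (0.0, 1.0),
    "dog": (0.9, 0.1),
    "ears": (0.8, 0.2),
}


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return 1.0 - dot / (math.hypot(*u) * math.hypot(*v))


def max_word_distance(description: str) -> float:
    """Largest cosine distance between any two known words in a description."""
    words = [w for w in description.lower().split() if w in WORD_VECS]
    return max(
        (cosine_distance(WORD_VECS[a], WORD_VECS[b]) for a, b in combinations(words, 2)),
        default=0.0,
    )


def accuracy(weights, inputs, labels, ablated: Set[int]) -> float:
    """Toy linear classifier: predict 1 when the weighted sum of the
    non-ablated neurons' activations is positive."""
    correct = 0
    for x, y in zip(inputs, labels):
        s = sum(w * a for j, (w, a) in enumerate(zip(weights, x)) if j not in ablated)
        if int(s > 0) == y:
            correct += 1
    return correct / len(labels)


def ablation_curve(descriptions: List[str], weights, inputs, labels) -> List[float]:
    """Ablate neurons one at a time, most semantically mixed description first,
    recording accuracy before any removal and after each one."""
    order = sorted(
        range(len(descriptions)),
        key=lambda j: max_word_distance(descriptions[j]),
        reverse=True,
    )
    ablated: Set[int] = set()
    curve = [accuracy(weights, inputs, labels, ablated)]
    for j in order:
        ablated.add(j)
        curve.append(accuracy(weights, inputs, labels, ablated))
    return curve


# Made-up two-neuron model and three labeled inputs.
descriptions = ["fossils and vases", "dog ears"]
weights = [0.1, 1.0]
inputs = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0]]
labels = [1, 0, 1]
print(ablation_curve(descriptions, weights, inputs, labels))
```

Here removing the "fossils and vases" neuron leaves accuracy unchanged, while removing the coherent "dog ears" neuron as well drops it to one in three.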

They also used MILAN to audit models to see whether they had learned something unexpected. The researchers took image classification models that were trained on datasets in which human faces were blurred out, ran MILAN, and counted how many neurons were nevertheless sensitive to human faces.
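Once every neuron has a text description, an audit like this can reduce to searching that text. The sketch below assumes a simple keyword match, a simplification made for illustration since the article does not say how face-sensitive neurons were identified; the keyword list, the function names `is_face_neuron` and `count_face_neurons`, and the descriptions are all hypothetical, and the toy counts say nothing about the real models.

```python
import re
from typing import Dict, Iterable, List

# Hypothetical keyword list for face-related descriptions.
FACE_WORDS = {"face", "faces", "eye", "eyes", "nose", "mouth", "lips"}


def is_face_neuron(description: str) -> bool:
    """True if a neuron's description mentions any face-related keyword."""
    tokens = set(re.findall(r"[a-z]+", description.lower()))
    return bool(tokens & FACE_WORDS)


def count_face_neurons(models: Dict[str, Iterable[str]]) -> Dict[str, int]:
    """Map each model name to how many of its neuron descriptions mention faces."""
    return {name: sum(is_face_neuron(d) for d in descs) for name, descs in models.items()}


# Made-up neuron descriptions for two tiny models.
models: Dict[str, List[str]] = {
    "trained on original images": ["human faces", "dog ears", "eyes and noses"],
    "trained on face-blurred images": ["blurry faces", "text on signs", "wheels"],
}
print(count_face_neurons(models))
```

Matching on whole tokens rather than substrings avoids false hits such as "surface" matching "face".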

“Blurring the faces in this way does reduce the number of neurons that are sensitive to faces, but it is far from eliminating them. In fact, we hypothesize that some of these face neurons are very sensitive to specific demographic groups, which is quite surprising. These models have never seen a human face before, and yet all kinds of facial processing happens inside them,” Hernandez says.

In a third experiment, the team used MILAN to edit a neural network by finding and removing neurons that were detecting bad correlations in the data, which led to a 5 percent improvement in the network's accuracy on inputs exhibiting the problematic correlation.
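The editing step can be sketched as "switch off every neuron whose description mentions the spurious cue, then re-measure accuracy on inputs that show the correlation." The article does not say what the problematic correlation was, so the cue word "text", the dog-versus-cat toy model, the function name `edit_and_evaluate`, and all numbers are hypothetical. The toy gain is arbitrary and does not correspond to the reported 5 percent.

```python
from typing import List, Sequence, Set, Tuple


def predict(weights: Sequence[float], x: Sequence[float], ablated: Set[int]) -> int:
    """Toy linear classifier: 1 if the weighted sum of non-ablated neurons is positive."""
    s = sum(w * a for j, (w, a) in enumerate(zip(weights, x)) if j not in ablated)
    return int(s > 0)


def accuracy(weights, inputs, labels, ablated: Set[int]) -> float:
    hits = sum(predict(weights, x, ablated) == y for x, y in zip(inputs, labels))
    return hits / len(labels)


def edit_and_evaluate(
    descriptions: List[str], cue_words: Set[str], weights, inputs, labels
) -> Tuple[float, float, Set[int]]:
    """Switch off every neuron whose description mentions a cue word, and
    report accuracy before and after the edit."""
    flagged = {j for j, d in enumerate(descriptions) if set(d.lower().split()) & cue_words}
    before = accuracy(weights, inputs, labels, set())
    after = accuracy(weights, inputs, labels, flagged)
    return before, after, flagged


# Made-up dog-vs-cat model (label 1 = dog) in which neuron 1 has latched onto
# text that happened to co-occur with dogs in the training data.
descriptions = ["dog fur", "written text", "cat whiskers"]
weights = [1.0, 2.0, -1.0]
# Evaluation inputs that exhibit the problematic correlation: some cats with text.
inputs = [[0.0, 1.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.5], [1.0, 1.0, 0.0]]
labels = [0, 1, 0, 1]
print(edit_and_evaluate(descriptions, {"text"}, weights, inputs, labels))
```

Because the edit only zeroes contributions, it needs no retraining; the trade-off is that any useful signal carried by a flagged neuron is lost too.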

While the researchers were impressed by how well MILAN performed in these three applications, the model sometimes gives descriptions that are still too vague, or it will make an incorrect guess when it doesn't know the concept it is supposed to identify.

They are planning to address these limitations in future work. They also want to continue enhancing the richness of the descriptions MILAN is able to generate. They hope to apply MILAN to other types of neural networks and use it to describe what groups of neurons do, since neurons work together to produce an output.

“This is an approach to interpretability that starts from the bottom up. The goal is to generate open-ended, compositional descriptions of function with natural language. We want to tap into the expressive power of human language to generate descriptions that are a lot more natural and rich for what neurons do. Being able to generalize this approach to different types of models is what I am most excited about,” says Schwettmann.

“The ultimate test of any technique for explainable AI is whether it can help researchers and users make better decisions about when and how to deploy AI systems,” says Andreas. “We're still a long way off from being able to do that in a general way. I'm optimistic that MILAN, and the use of language as an explanatory tool more broadly, will be a useful part of the toolbox.”

Reference: “Natural Language Descriptions of Deep Visual Features” by Evan Hernandez, Sarah Schwettmann, David Bau, Teona Bagashvili, Antonio Torralba and Jacob Andreas, 26 January 2022, Computer Science > Computer Vision and Pattern Recognition. arXiv:2201.11114

This work was funded, in part, by the MIT-IBM Watson AI Lab and the [email protected] initiative.

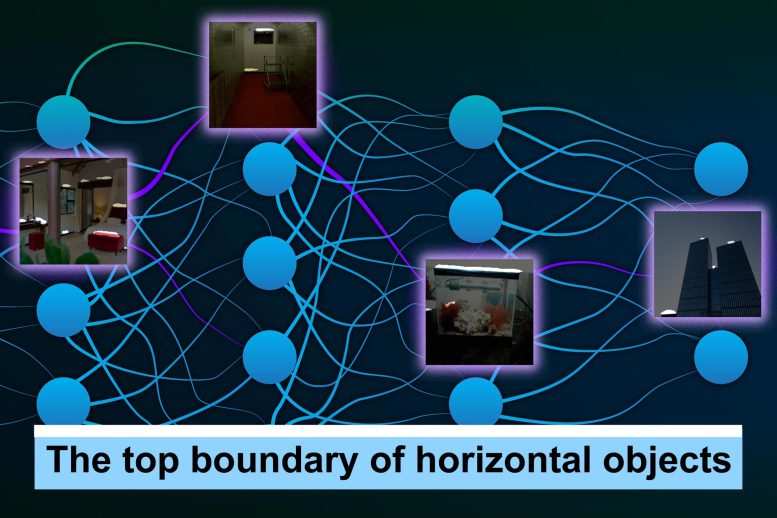

MIT researchers created a technique that can automatically describe the roles of individual neurons in a neural network with natural language. In this figure, the technique was able to identify “the top boundary of horizontal objects” in photographs, which are highlighted in white. Credit: Photographs courtesy of the researchers, edited by Jose-Luis Olivares, MIT

A new method automatically describes, in natural language, what the individual components of a neural network do.

Neural networks are sometimes called black boxes because, despite the fact that they can outperform humans on certain tasks, even the researchers who design them often don't understand how or why they work so well. But if a neural network is used outside the lab, perhaps to classify medical images that could help diagnose heart conditions, knowing how the model works helps researchers predict how it will behave in practice.

MIT researchers have now developed a method that sheds some light on the inner workings of black box neural networks. Modeled on the human brain, neural networks are arranged into layers of interconnected nodes, or “neurons,” that process data. The new system can automatically produce descriptions of those individual neurons, generated in English or another natural language.