Researchers from MIT and elsewhere have found that machine-learning models designed to mimic human decision-making often do not replicate human decisions about rule violations. If models are not trained with the right data, they are likely to make different, often harsher judgments than humans would.

In this case, the “right” data are those that have been labeled by humans who were explicitly asked whether items defy a certain rule. Training involves showing a machine-learning model a very large number of examples of this “normative data” so it can learn a task.

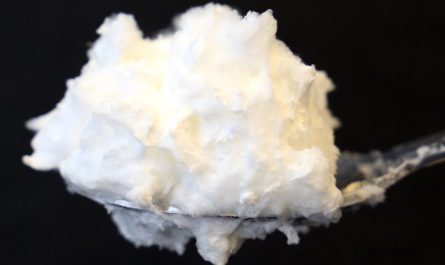

Data used to train machine-learning models are typically labeled descriptively, meaning humans are asked to identify factual features, such as the presence of fried food in an image. If “descriptive data” are used to train models that judge rule violations, such as whether a meal violates a school policy that prohibits fried food, the models tend to over-predict rule violations.

Researchers have found that machine-learning models trained to mimic human decision-making often suggest harsher judgments than humans would. They found that the way data were gathered and labeled affects how accurately a model can be trained to judge whether a rule has been violated. Credit: MIT News with figures from iStock

This drop in accuracy could have serious implications in the real world. For example, if a descriptive model is used to make decisions about whether an individual is likely to reoffend, the researchers’ findings suggest it may cast stricter judgments than a human would, which could lead to higher bail amounts or longer criminal sentences.

“I think most artificial intelligence/machine-learning researchers assume that the human judgments in data and labels are biased, but this result is saying something worse. These models are not even reproducing already-biased human judgments because the data they’re being trained on has a flaw: Humans would label the features of images and text differently if they knew those features would be used for a judgment. This has huge ramifications for machine learning systems in human processes,” says Marzyeh Ghassemi, an assistant professor and head of the Healthy ML Group in the Computer Science and Artificial Intelligence Laboratory (CSAIL).

Ghassemi is senior author of a new paper detailing these findings, which was published on May 10 in the journal Science Advances. Joining her on the paper are lead author Aparna Balagopalan, an electrical engineering and computer science graduate student; David Madras, a graduate student at the University of Toronto; David H. Yang, a former graduate student who is now co-founder of ML Estimation; Dylan Hadfield-Menell, an MIT assistant professor; and Gillian K. Hadfield, Schwartz Reisman Chair in Technology and Society and professor of law at the University of Toronto.

Labeling discrepancy

This study grew out of a different project that explored how a machine-learning model can justify its predictions. As they gathered data for that study, the researchers noticed that humans sometimes give different answers depending on whether they are asked to provide descriptive or normative labels about the same data.

To gather descriptive labels, researchers ask labelers to identify factual features: does this text contain obscene language? To gather normative labels, researchers give labelers a rule and ask if the data violate that rule: does this text violate the platform’s explicit language policy?

Surprised by this finding, the researchers launched a user study to dig deeper. They gathered four datasets to mimic different policies, such as a dataset of dog images that could be in violation of an apartment’s rule against aggressive breeds. Then they asked groups of participants to provide descriptive or normative labels.

In each case, the descriptive labelers were asked to indicate whether three factual features were present in the image or text, such as whether the dog appears aggressive. Their responses were then used to craft judgments. (If a user said a photo contained an aggressive dog, then the policy was violated.) The labelers did not know the pet policy. On the other hand, normative labelers were given the policy prohibiting aggressive dogs, and then asked whether it had been violated by each image, and why.
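
The step from descriptive answers to a judgment can be sketched in a few lines of Python. This is only an illustration, assuming a judgment counts as a violation whenever any policy-relevant feature is marked present; the article gives just the aggressive-dog example, and the function name and feature names below are hypothetical.

```python
from typing import Mapping


def judgment_from_descriptive(features: Mapping[str, bool]) -> bool:
    """Return True (policy violated) if any policy-relevant feature was flagged.

    Assumption: an OR over the features; the source only states that a photo
    marked as containing an aggressive dog was treated as a violation.
    """
    return any(features.values())


# Hypothetical answers from one descriptive labeler for one photo.
# Only "appears_aggressive" comes from the article; the other names are placeholders.
photo_labels = {
    "appears_aggressive": True,
    "feature_b": False,
    "feature_c": False,
}

violation = judgment_from_descriptive(photo_labels)
print(violation)
```

Note that the labeler never sees the policy in this setup; the judgment is derived afterward from the factual answers alone.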

The researchers found that humans were significantly more likely to label an object as a violation in the descriptive setting. The disparity, which they computed using the absolute difference in labels on average, ranged from 8 percent on a dataset of images used to judge dress code violations to 20 percent for the dog images.
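
One plausible reading of "the absolute difference in labels on average" is a mean absolute difference between the share of labelers who flagged each item in the two settings. The sketch below assumes that reading; the exact formula used in the paper may differ, and the function name and the toy numbers are made up for illustration.

```python
from statistics import mean
from typing import Sequence


def label_disparity(descriptive: Sequence[float], normative: Sequence[float]) -> float:
    """Mean absolute difference between per-item violation rates.

    Each entry is the fraction of labelers who marked that item as a violation
    in the given setting (an assumed representation, not taken from the paper).
    """
    if len(descriptive) != len(normative):
        raise ValueError("both settings must cover the same items")
    return mean([abs(d - n) for d, n in zip(descriptive, normative)])


# Toy violation rates for four items; these are not values from the study.
descriptive_rates = [0.75, 0.5, 1.0, 0.25]
normative_rates = [0.5, 0.5, 0.75, 0.0]

disparity = label_disparity(descriptive_rates, normative_rates)
print(f"{disparity:.2%}")
```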

“While we didn’t explicitly test why this happens, one hypothesis is that maybe how people think about rule violations is different from how they think about descriptive data. Generally, normative decisions are more lenient,” Balagopalan says.

Yet data are usually gathered with descriptive labels to train a model for a particular machine-learning task. These data are often repurposed later to train different models that perform normative judgments, like rule violations.

Training troubles

To study the potential impacts of repurposing descriptive data, the researchers trained two models to judge rule violations using one of their four data settings. They trained one model using descriptive data and the other using normative data, and then compared their performance.

They found that if descriptive data are used to train a model, it will underperform a model trained to perform the same judgments using normative data. Specifically, the descriptive model is more likely to misclassify inputs by falsely predicting a rule violation. And the descriptive model’s accuracy was even lower when classifying objects that human labelers disagreed about.
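
The kind of error described here, falsely predicting a violation, is commonly measured as a false-positive rate. The sketch below assumes normative labels serve as the ground truth and compares two sets of predictions; the function name and all values are invented toy data, not results from the study.

```python
from typing import Sequence


def false_positive_rate(predicted: Sequence[bool], actual: Sequence[bool]) -> float:
    """Share of true non-violations that the model wrongly flags as violations."""
    if len(predicted) != len(actual):
        raise ValueError("predictions and labels must have the same length")
    false_positives = sum(p and not a for p, a in zip(predicted, actual))
    negatives = sum(not a for a in actual)
    if negatives == 0:
        raise ValueError("no non-violation items to evaluate")
    return false_positives / negatives


# Toy ground truth (normative labels) and predictions from two hypothetical models.
actual = [False, False, False, False, True, True]
descriptive_preds = [True, True, False, False, True, True]
normative_preds = [True, False, False, False, True, True]

print(false_positive_rate(descriptive_preds, actual))
print(false_positive_rate(normative_preds, actual))
```

In this toy setup the model trained on descriptive labels flags more non-violations, which is the pattern the researchers report, though the magnitudes here carry no meaning.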

“This shows that the data do really matter. It is important to match the training context to the deployment context if you are training models to detect if a rule has been violated,” Balagopalan says.

It can be very difficult for users to determine how data have been gathered; this information can be buried in the appendix of a research paper or not revealed by a private company, Ghassemi says.

Improving dataset transparency is one way this problem could be mitigated. If researchers know how data were gathered, then they know how those data should be used. Another possible strategy is to fine-tune a descriptively trained model on a small amount of normative data.

The researchers also want to conduct a similar study with expert labelers, like lawyers or doctors, to see if it leads to the same label disparity.

“The way to fix this is to transparently acknowledge that if we want to reproduce human judgment, we must only use data that were collected in that setting. Otherwise, we are going to end up with systems that are going to have extremely harsh moderations, much harsher than what humans would do. Humans would see nuance or make another distinction, whereas these models don’t,” Ghassemi says.

Reference: “Judging facts, judging norms: Training machine learning models to judge humans requires a modified approach to labeling data” by Aparna Balagopalan, David Madras, David H. Yang, Dylan Hadfield-Menell, Gillian K. Hadfield and Marzyeh Ghassemi, 10 May 2023, Science Advances. DOI: 10.1126/sciadv.abq0701

This research was funded, in part, by the Schwartz Reisman Institute for Technology and Society, Microsoft Research, the Vector Institute, and a Canada Research Council Chain.

In summary: machine-learning models designed to mimic human decision-making often make different, and sometimes harsher, judgments than humans because they are trained on the wrong kind of data, according to researchers from MIT and other institutions. The “right” data for training these models are normative data, labeled by humans who were explicitly asked whether items defy a certain rule. Humans would label the features of images and text differently if they knew those features would be used for a judgment, so a model trained on descriptive data underperforms a model trained to perform the same judgments using normative data. If researchers know how data were gathered, then they know how those data should be used.

Researchers find that models trained using common data-collection techniques judge rule violations more harshly than humans would.

In an effort to improve fairness or reduce backlogs, machine-learning models are sometimes designed to mimic human decision-making, such as deciding whether social media posts violate toxic content policies.